Large polygons are a problem for geoprocessing operations. Intersections involving large polygons are slow because the entire geometry needs to be evaluated. In addition to this, spatial indexing doesn’t help (much) because the bounding box of the polygon is itself large.

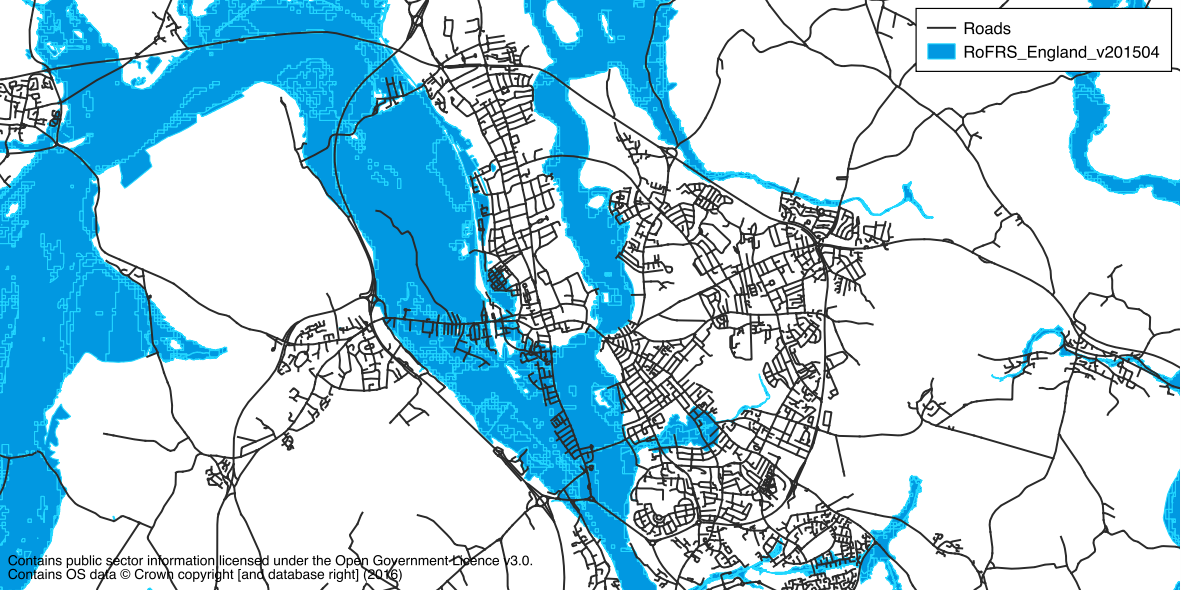

This is a common problem with flood extent data, which is exported from hydraulic models without much thought as to how it will be used. An example of this is the Risk of Flooding from Rivers and Sea (RoFRS) layer available from the Environment Agency (released under the OGL on EA Geostore). For example, this dataset has a single polygon for the entire River Thames upstream of Reading. This feature has 200,454 vertices and an area of 185 km²!

Another common problem with flood extent data is invalid polygons. Of the 2,129,750 features in the RoFRS data 16,974 are invalid (0.8%). Invalid geometries are a bad thing as they don’t always behave as expected. It’s possible to fix some invalid polygons by buffering them by zero, but this has it’s limitations. We’ll ignore this issue for now and focus on the first problem.

Splitting using a regular grid

One solution is to split these large polygons into smaller, more manageable chunks. The total area of the polygon is preserved, but intersections only need to query the locally-relevant data. There is an initial overhead to this, both in processing time and disk space, but subsequent queries will be much faster.

The original data can be split by intersecting with a regular grid or “fishnet”. It is guaranteed that none of the resulting geometries are larger than the grid size. This approach has two issues: 1) each grid cell needs to be intersected with the large polygon (it intersects the bounding box), and 2) sometimes polygons that are smaller than the grid size will be split just because of their position relative to the grid.

An example of this algorithm for a single geometry is given below, implemented using Shapely.

from shapely.geometry import box

def fishnet(geometry, threshold):

bounds = geometry.bounds

xmin = int(bounds[0] // threshold)

xmax = int(bounds[2] // threshold)

ymin = int(bounds[1] // threshold)

ymax = int(bounds[3] // threshold)

ncols = int(xmax - xmin + 1)

nrows = int(ymax - ymin + 1)

result = []

for i in range(xmin, xmax+1):

for j in range(ymin, ymax+1):

b = box(i*threshold, j*threshold, (i+1)*threshold, (j+1)*threshold)

g = geometry.intersection(b)

if g.is_empty:

continue

result.append(g)

return resultSplitting using an alternative method

An alternate method for splitting large polygons is proposed here. The polygon is first split into two parts across it’s shortest dimension (either left to right or top to bottom). If these parts are larger than a given threshold they are split again in a similar manner. This is repeated until all of the parts are the desired size. This method has two advantages over using a regular grid: the first division of the polygon requires the entire geometry to be evaluated, but the second considers each of the two parts independently, and so on, thereby reducing the effort required; and additionally, polygons already smaller than the size threshold are never split because the “grid” is aligned to each polygon individually, further reducing the effort required (and also producing a better result).

The algorithm is presented below. The function is named katana after the long, single-edged sword used by Japanese samurai.

from shapely.geometry import box, Polygon, MultiPolygon, GeometryCollection

def katana(geometry, threshold, count=0):

"""Split a Polygon into two parts across it's shortest dimension"""

bounds = geometry.bounds

width = bounds[2] - bounds[0]

height = bounds[3] - bounds[1]

if max(width, height) <= threshold or count == 250:

# either the polygon is smaller than the threshold, or the maximum

# number of recursions has been reached

return [geometry]

if height >= width:

# split left to right

a = box(bounds[0], bounds[1], bounds[2], bounds[1]+height/2)

b = box(bounds[0], bounds[1]+height/2, bounds[2], bounds[3])

else:

# split top to bottom

a = box(bounds[0], bounds[1], bounds[0]+width/2, bounds[3])

b = box(bounds[0]+width/2, bounds[1], bounds[2], bounds[3])

result = []

for d in (a, b,):

c = geometry.intersection(d)

if not isinstance(c, GeometryCollection):

c = [c]

for e in c:

if isinstance(e, (Polygon, MultiPolygon)):

result.extend(katana(e, threshold, count+1))

if count > 0:

return result

# convert multipart into singlepart

final_result = []

for g in result:

if isinstance(g, MultiPolygon):

final_result.extend(g)

else:

final_result.append(g)

return final_resultThe algorithm has been implemented recursively as this seem to make sense conceptually. This means that it might be limited by Python’s recursion depth limit, although this is unlikely to be an issue in practice unless working with very, very large polygons or very small thresholds. If this really is an issue it could be refactored to use a heap instead.

Benchmarking

The speedups achieved using either approach will be dependent on the data used and the questions being asked. In general, the more subsequent queries that are run the better the return on the initial investment.

The table below compares the two algorithms (and the “do nothing” approach) for the following example problem: how many roads are at risk of flooding in a given area. The data used were the RoFRS layer discussed previously, and the OS Open Map Local Roads layer for the SP tile (a 100x100km area containing 176,512 line features).

| Method | Time taken | Num. features | Num. vertices | Subsequent queries |

|---|---|---|---|---|

| None | – | 2,129,751 | 63,472,852 | > 2.5 hours * |

| Fishnet | 668 mins | 2,419,661 (+13.6%) | 65,214,478 (+2.7%) | 106 seconds |

| Katana | 42 mins | 2,213,649 (+3.9%) | 63,960,886 (+0.8%) | 106 seconds |

* After 2.5 hours the process was terminated, as the point has been made.

The first thing to note is that subsequent queries using both approaches are significantly faster than the “do nothing” approach. After the initial overhead the subsequent queries can make better use of spatial indexing, and where a detailed intersection does need to be performed the geometries are much less complex.

Both methods result in the same time taken for the subsequent queries. However, the time taken in preprocessing using the fishnet method is 16x slower than the katana method. Additionally, the number of additional vertices is less using the katana method.

License

The above code is released under the BSD 2-clause license:

Copyright (c) 2016, Joshua Arnott

All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.